Posts about stuff relating to airports

Creating a Free Airport Safety Reporting & Management System

Safety reporting is the life-blood of a modern safety management system. In the early days of implementation, a great deal of effort was (and still is) expended in increasing the reporting of safety events (incidents and other occurrences) and hazards. As an industry, we’ve discussed and debated no-blame and just cultures. We’ve promulgated policies and waved flags, telling our team members that we can’t manage what we don’t measure. And we’ve implemented safety occurrence reporting systems to capture all this information.

If we’ve been successful in these endeavours, we’ve then faced a new problem - what do we do with all these reports? A classic case of be careful what you wish for!

Safety Governance Systems

Once upon a time, I went around the countryside auditing aerodrome safety management systems and dutifully asking SMS-related questions of all and sundry. It didn't matter who they were; I asked them what they knew about the aerodrome's SMS, how they managed risks, and what they did to make sure everything was being well managed. I didn't ask everyone the exact same questions, like asking the guy mowing the grass how he ensured enough resources were available to manage safety, but I did bang the SMS gong at anyone who was around or would listen. I'm not so sure that was the right approach.

Noun-based Regulation

The modern world is definitely in love with its noun-based activities. Each week, a paradigm-shifting approach to some human endeavour is announced with a title like value-based health care or outcome-based education. When I delve into the details, I am generally left confused as to either what they are selling or how they are different at all. Regulation is no different. Just plugging "based regulation" into Google yields, on the first page alone, principle-based, results-based, performance-based, outcomes-based and output-based regulatory approaches.

Trust & Accountability

Recently, I sat in on a presentation on a subject I know quite a bit about. I like doing this as it is typically good to get a different perspective on a familiar subject. In this instance, it wasn't so much the actual subject matter but a couple of associated topics which got stuck in my mind.

A World without Reason

Recently, I have felt like I'm in danger of becoming complacent with the bedrock of my chosen field. I'll admit that in the past, I've been fairly vocal about this bedrock's limitations and mantra-like recitation by aviation safety professionals the world over. But the recent apparent abandonment of this concept by one of the first Australian organisations to go "all-in" on it gave me cause for reflection. I am, if you haven't guessed it, talking about the "Reason Model" or "Swiss Cheese Model".

Unnecessary Segregation or Pragmatic Isolation?

I've been out in the "real" world for the past six months or so and in that time, my thinking on risk management has changed a little bit. So here it comes, a confession... I have been using a PIG recently and I have felt its use has probably helped with effective management of overall risk.

No Man is an Island

I've been a bit out of the loop over the past couple of months as I try to get a handle on my new job and the (almost overwhelming) responsibility that goes along with it. But I can't ignore the action over at the Federal Senate's Rural and Regional Affairs and Transport References Committee's inquiry into Aviation Accident Investigations.

Image by https://fshoq.com

BTIII: Assessing Uncertainty

I can't lie to you. I have been turning myself inside out trying to get a handle on risk evaluation in the aviation safety sphere for close to five years now and I still don't feel any closer to an answer. And I say "an" answer and not "the" answer. Since you are always assessing risk in terms of your objectives, there can and will be multiple approaches to assessing the risk of the same scenario depending on whether you are considering your safety, financial or legal objectives.

Systems Modelling

When I joined the aviation safety regulator I was introduced to the concept of systems-based auditing (SBA). Before this I had been carrying out aerodrome inspections and I thought becoming an Aerodrome Inspector for the government was going to be more of the same. How wrong I was! Even after four years, my concept of systems-based auditing is still evolving. I am coming to discover, and it seems everything I read will attest, that most things in life tend to be more complex than we initially think - SBA is no different.

Image credit: Jeremy Waterhouse (via Pexels)

Regulation, The Final Frontier?

The week before last, I finished a 4-year stint with the aviation safety regulator. Even though I'm heading back to industry, I'm not going to stop writing this blog. I believe that the role of the national regulator is the next safety frontier (not the last ;)) and I like the idea of exploring new territory. As the industry continues to explore concepts like safety management, systems-based this, risk-based that and outcome-based whatchamacallit as well as safety culture, we are all going to come to the realisation that safety can be greatly affected (more than we ever imagined) by the approach and actions taken by a national regulator.

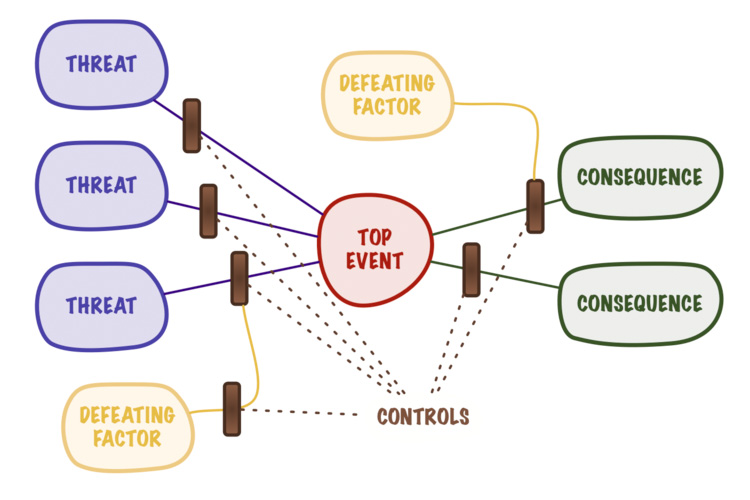

BTII: Control-freak*

As a follow-on to my first post on the Bow-Tie risk assessment method, I thought I'd concentrate on controls (or barriers or whatever else you would like to call them). This is, after all, where all the action happens. Risk controls are how we spend most of our time - they are the practical aspect of managing risk.

Lessons from Taleb's Black Swan

Having just finished reading Nassim Taleb's The Black Swan, I initially thought about writing a not-so-in-depth assessment of the book's positive and negative points - but I'm not much of a book reviewer and a comprehensive critique is probably beyond my capabilities (at this stage). So, instead I thought I would focus on just a couple of the book's significant concepts and explore how they may apply in the aviation context.

BTI: Dressing up for Risk Assessments

I've been doing a lot of pondering on the Bow-Tie method of risk assessment for a project at work. Bow-Tie is a tool used by many, especially in the oil & gas industry, to create a picture of risk surrounding a central event. It's got a few positives and a few negatives but these can be overcome if you understand the limitations of the model being used.

Crowd-sourced Certifications

I've just been mucking around with a new Internet service called Smarterer. That's not a typo, it really is Smarter-er. I guess, in a nutshell, it's an online quiz creator which is meant to help you quantify and showcase your skills. The twist in this implementation is that the quizzes are crowd-sourced. Anyone can write questions for the quiz and thus over time, the group interested in the topic defines the content and the grading of the quiz.

Under Thinking Just Culture and Accountability

I am definitely capable of over thinking, of tying myself up in knots and being lost in the detail. And other times, I probably haven't thought enough. Recently, I identified just culture as a concept I hadn't really thought about in-depth.

In my mind, I thought I knew what a just culture was. I knew it was more than a simple no-blame policy. I knew it involved establishing what is acceptable and not acceptable behaviour. But that had been the limit of my thinking.

SMS Considered

While in Bali talking Runway Safety with a wide range of industry personalities, I found myself at the hotel bar talking SMS with Bill Voss from Flight Safety Foundation. The topic was obviously on Bill's mind because upon my return, I found his latest president's piece in FSF's AeroSafety World to be a good overview of his main SMS points. Some of these points have been on my mind too. Since I'm not one to recreate the wheel (providing it works and is fit for purpose), I'll use some of Bill's well-formed words to kick this off.

Image credit: Nick Bondarev (via Pexels)

Logical Fallacies in the Safety Sphere

Sometimes I feel like I really missed out by not receiving a "classical" education. While I can probably live without the Latin and Greek philosophy, one area I've been keen to pick up is formal logic. The forming of a coherent and valid argument is a key skill which is, in my opinion, overlooked in safety management. Which is disappointing since making such an argument is at the heart of making a safety case.

I'm not going to tackle the subject of logic today. To be honest, I don't know enough about the overall concept. Instead, I'm going to focus on the typical failings present in a logical argument - the logical fallacies.

Image credit: Steve Johnson (via Pexels)

Integrating Runway Safety Teams with your Safety Management System

I've just spent an amazing week in Bali workshopping with operators and regulators from the Asia-Pacific region (and some from further afield) on the issue of runway safety. We got a lot of good information from the Flight Safety Foundation, ICAO and COSCAP as well as airlines, airports and regional regulators. The primary objective of the week was to provide information on and practice in the establishment and conduct of Local Runway Safety Teams (LRSTs). To this end, the seminars and workshop were great but I left feeling like one connection had been missed. The final question on my mind and many others, I am sure, was:

How do these runway safety initiatives integrate into my SMS?

Image: Agência Brasil

Levels. Levels? Yeah...

Seinfeld fans may remember this short exchange. Kramer might have been on to something and it had nothing to do with interior design. In my research and work, I've been butting up against a few theoretical roadblocks. But I am starting to think that these roadblocks are actually different levels. Internet guru* Merlin Mann often observes that people need to solve the right problem at the right level. And now, I'm starting to think that is exactly what I need to do.

Work-Me & Blog-Me

AUGUST 2012 UPDATE: I've changed jobs since I posted this. However, I think it still works as a fair assessment of the relationship between this blog and my current job, which is not with the regulator. In this, the Web 2.0 world, connections can be made easily. There is no practical way to completely disconnect my blogging from my work.

And while my little disclaimer on the right is designed to create a barrier between the two, it probably doesn't address what has the potential to be a complex relationship.